There's something to be said about learning new things, and I've really dug in to Stable Diffusion a lot. The learning curve is still steep, but I'm getting a lot better. One of the tools I've been using a lot more recently is the Sketch tool. It allows me to draw in an MS Paint style, add a prompt and get great results out of it.

One of the cards I've created recently started as a simple drawing:

That image was drawn in the software. Now, I did have a memory issue that made the system fail out (I really need a new GPU), so I had to do a screen capture, which is the image above. So I took the capture into Affinity Photo to size it right and did some editing to clean it up a bit more:

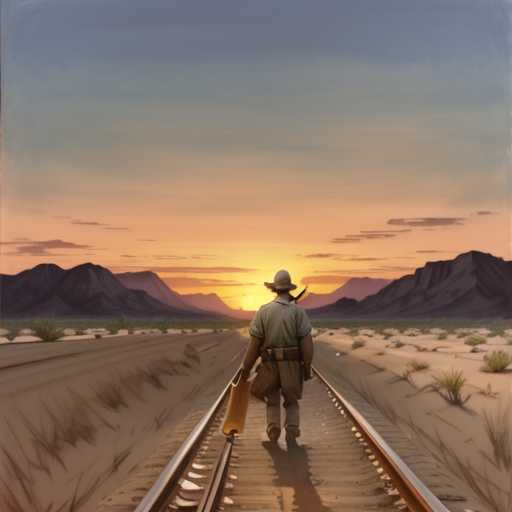

Then I pulled it into the Image to Image function in Stable Diffusion and gave it the prompt: "A sunset with mountains in the desert and train tracks going off into the horizon, painting, dim lighting, simple landscape" with some strict paramaters so it wouldn't deviate too far from the basics of the image above. This is the result:

I ran it through a couple more variations then put this back into the Sketch function:

I used that image in the sketch function again to add the silhouette of a man walking towards the sun, which did give me some results that were very bad, but a couple I could work with:

It took a lot of editing from here to get the proportions right, and there was a lot of back and forth between Affinity and Stable Diffusion, but I did eventually end up with the image that is used in the card:

Overall it was about a three hour process, but that's partially because I was doing other things throughout the process, so in reality it was likely closer to an hour to hour and a half of actual work, including processing time (I really really need a new GPU).

Some of the things that are going to have to be decided sooner rather than later are: at what point does the content become art? At what point is the output of an AI image generator copyrightable? Who is going to be the owner of said copyright?

It's fascinating to me the amount of difference between what I started with and what I ended up with. It takes a lot of manual manipulation of the image and playing with prompts to get these kinds of results. Faster processing can definitely help you get better results with higher detailed prompts and more complex models (did I mention I need a new GPU?), but to get something like this, you do have to intervene in the process if you want to get the results you're after.