A few weeks ago I had no idea how to do anything with AI image generation except type basic prompts into an online demo model and hope something I liked came out. If it didn't, I would just keep hitting generate until I got something I wanted, with minimal (if any) changes to my prompts.

Then I decided I wanted to do more and learn the tools. So I installed Automatic1111 on my computer, started playing around, and made a lot of garbage. Then I did some reading about the different settings, add-ons like LoRAs and embeddings, and different base models. I played around with those for a bit and still made a lot of garbage. So I started looking at other people's prompts and experimenting with them alongside the settings and tools I had, to learn how they all interacted with each other.

Mind you, I had already used the tool's image-to-image function to do the Shad method of cleanup on poorly photoshopped images, and that actually worked well. What I wanted to do now was create something from whole cloth using the AI tool alone, without any outside intervention.

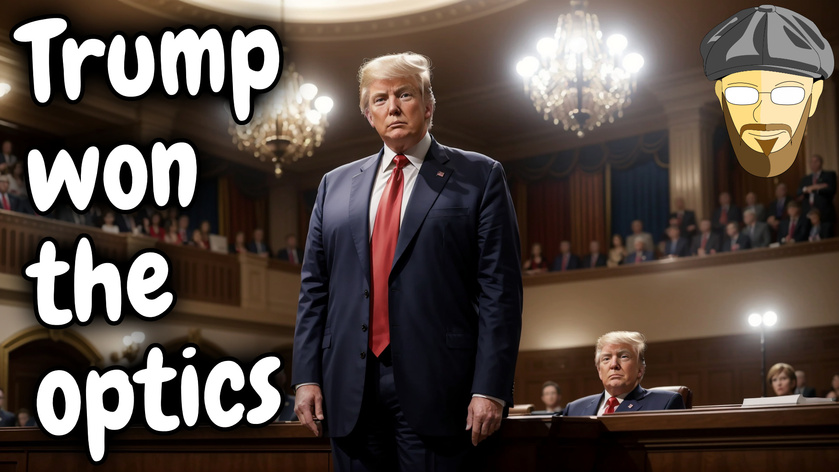

Up until recently, all my artwork had been done in Photoshop and Affinity Photo, including everything I had designed under the Ridgedale brand name. I started using online AI generation for some of my thumbnails, in the way I described at the beginning of this article, but I wanted to do more. I wanted to understand the tools, and after playing with other people's prompts, installing and tweaking LoRAs, and adding new base models and embeddings, I started to get the hang of things. Then I stumbled onto an article that went into detail on building prompts, and the wealth of information in it really helped me start to understand. I learned how to describe what I want (which takes more words and more specificity than I am used to), how to order those descriptions to get the result I'm after, and how to weight all of it along with the LoRAs and embeddings so everything comes together. I went from poorly describing a goblin and blending it with Chuck Schumer's head to make an image over the course of a few hours:

To being able to generate something very close to what I wanted in the same amount of time, using nothing but positive and negative prompts and one LoRA:

I won't go into too much detail on the process for these images. For the Schumer goblin, I had to do a lot of manual work in Affinity and in the AI tool to end up with what I got. With the lizard woman, on the other hand, I got a boatload of great images, and this is just the best one. It was also part of my learning process, so there was tons of tweaking in the prompting to get it where it is. The more I do this, the better and faster I'll get.

When it comes to producing content for the card game I'm working on, I will be using the mixed method. I want it to be AI-assisted art, not AI art. Now that I have learned a lot more about the process (and will keep learning), once I get all the pieces in place I'll be able to generate a lot of content very quickly and up to the standards I want, which are far higher than anything I could have reached without this tool.

I will be talking about this process a lot more here, and likely on the video channels, because that's where the content is heading. It won't be the only topic, but it will be the primary replacement for the stories (which conclude at the end of December) and for CSRQ when it finally finishes up.

If you want to be involved in some of the creative decision process, I've been putting up polls and having voice chat discussions in Guilded to bounce ideas off of other people. If you're a paid supporter here and you're not in the server, let me know and I'll invite you. If you're not a paid supporter, consider supporting and you can have a bit of a say in the process and see the polls.